Raspy: Putting Claude in a Box

Two months ago I bought a cyberdeck kit. Then a 3D printer. Now I have an ESP32 that talks to Claude.

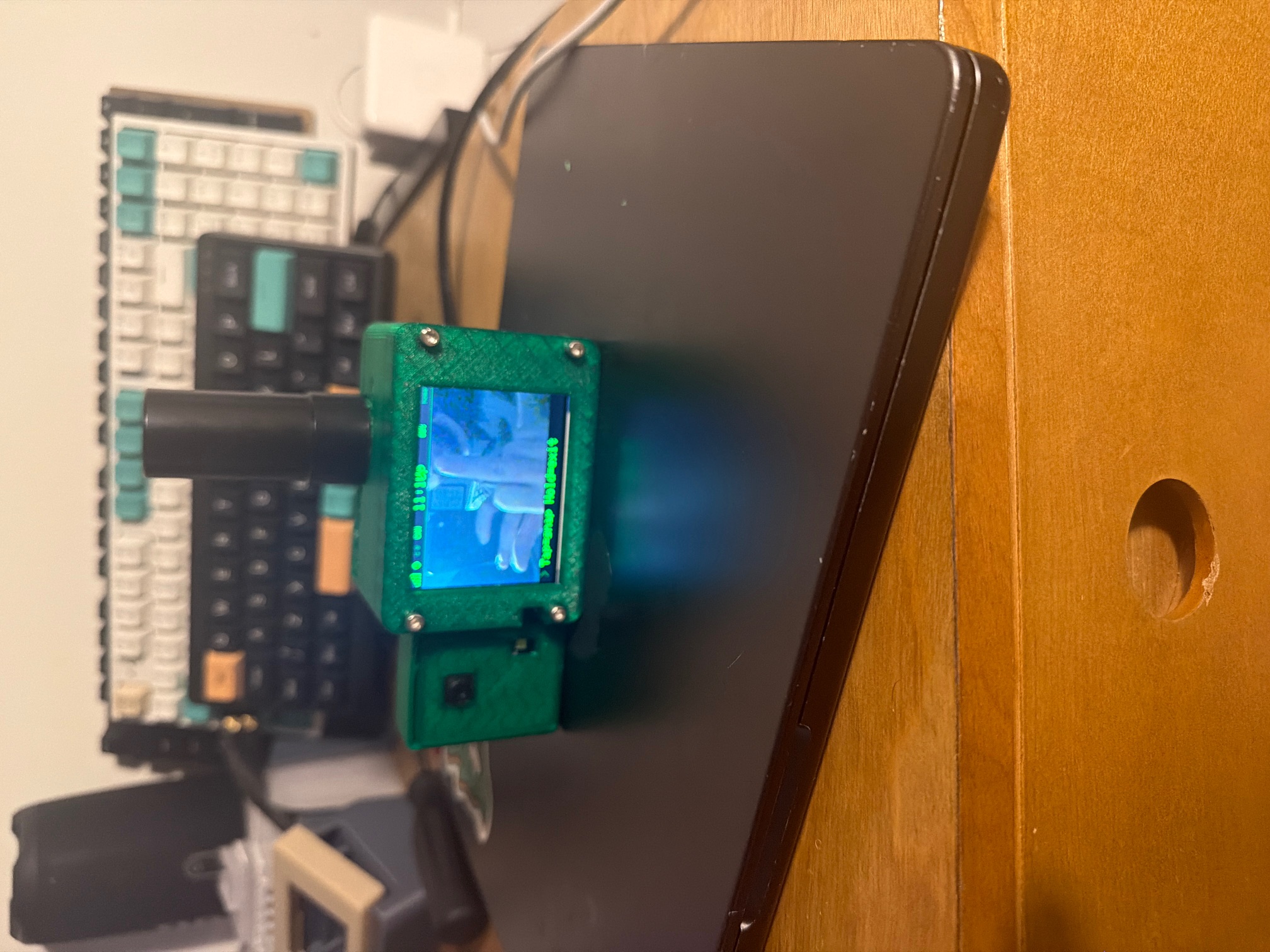

Two months ago I bought a Raspberry Pi cyberdeck kit off Etsy. Cool little thing - Pi 5, 3D-printed case, mechanical keyboard. Two weeks later I bought a 3D printer because I wanted to modify the case. One thing led to another.

Now I have an ESP32-S3 that listens for my voice, sends it to my homelab, runs it through Claude, and speaks back. It has a camera. It knows where I am via GPS. It switches between my home WiFi and a mobile hotspot automatically.

This is Raspy.

What It Is

Raspy is a voice-first interface to Claude that runs on an ESP32-S3. But the ESP32 isn't doing anything smart - it's a thin client. Record audio, ship it to the server, play back whatever comes back. All the intelligence lives on my homelab server.

The pipeline looks like this:

    [ESP32 Mic] → WebSocket → [ubuntu-homelab]
        ├── Wyoming Whisper (speech-to-text)
        ├── Claude CLI (the brain)
        └── Kokoro TTS (text-to-speech)
    → HTTP download → [ESP32 Speaker]

Push a button, talk, release. A few seconds later Claude responds through the speaker.
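The server side of one push-to-talk turn can be sketched as three swappable stages. This is a minimal Python sketch, not Raspy's actual server: the three callables stand in for a Wyoming Whisper client, a claude -p wrapper and a Kokoro TTS client, and the reply-ID scheme and /reply/<id>.wav path are hypothetical. The WebSocket and HTTP plumbing is left out; the idea is that the WebSocket handler calls handle_utterance() and sends the returned path back, and the ESP32 then downloads the audio with a plain HTTP GET.

```python
# Hypothetical sketch of one voice turn; not Raspy's actual server code.
import uuid
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class VoicePipeline:
    """Audio in -> text -> Claude -> speech out, with the reply kept for download."""

    transcribe: Callable[[bytes], str]  # stand-in for a Wyoming Whisper client
    think: Callable[[str], str]         # stand-in for a `claude -p` wrapper
    speak: Callable[[str], bytes]       # stand-in for a Kokoro TTS client
    replies: Dict[str, bytes] = field(default_factory=dict)

    def handle_utterance(self, audio: bytes) -> str:
        """Run all three stages and return a download path for the reply audio."""
        text = self.transcribe(audio)
        answer = self.think(text)
        reply_id = uuid.uuid4().hex
        self.replies[reply_id] = self.speak(answer)
        return f"/reply/{reply_id}.wav"  # hypothetical URL scheme

    def fetch_reply(self, reply_id: str) -> bytes:
        """Hand the reply out once (e.g. from an HTTP handler), then forget it."""
        return self.replies.pop(reply_id)


if __name__ == "__main__":
    pipeline = VoicePipeline(
        transcribe=lambda audio: "hello raspy",
        think=lambda text: f"You said: {text}",
        speak=lambda text: text.encode("utf-8"),  # fake "audio" for the demo
    )
    path = pipeline.handle_utterance(b"\x00\x00")
    reply_id = path.rsplit("/", 1)[1][: -len(".wav")]
    print(path, pipeline.fetch_reply(reply_id))
```

Returning a path instead of pushing audio down the same socket mirrors the "HTTP download" leg of the diagram, and keeping each stage a plain callable makes it easy to swap or stub one out.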

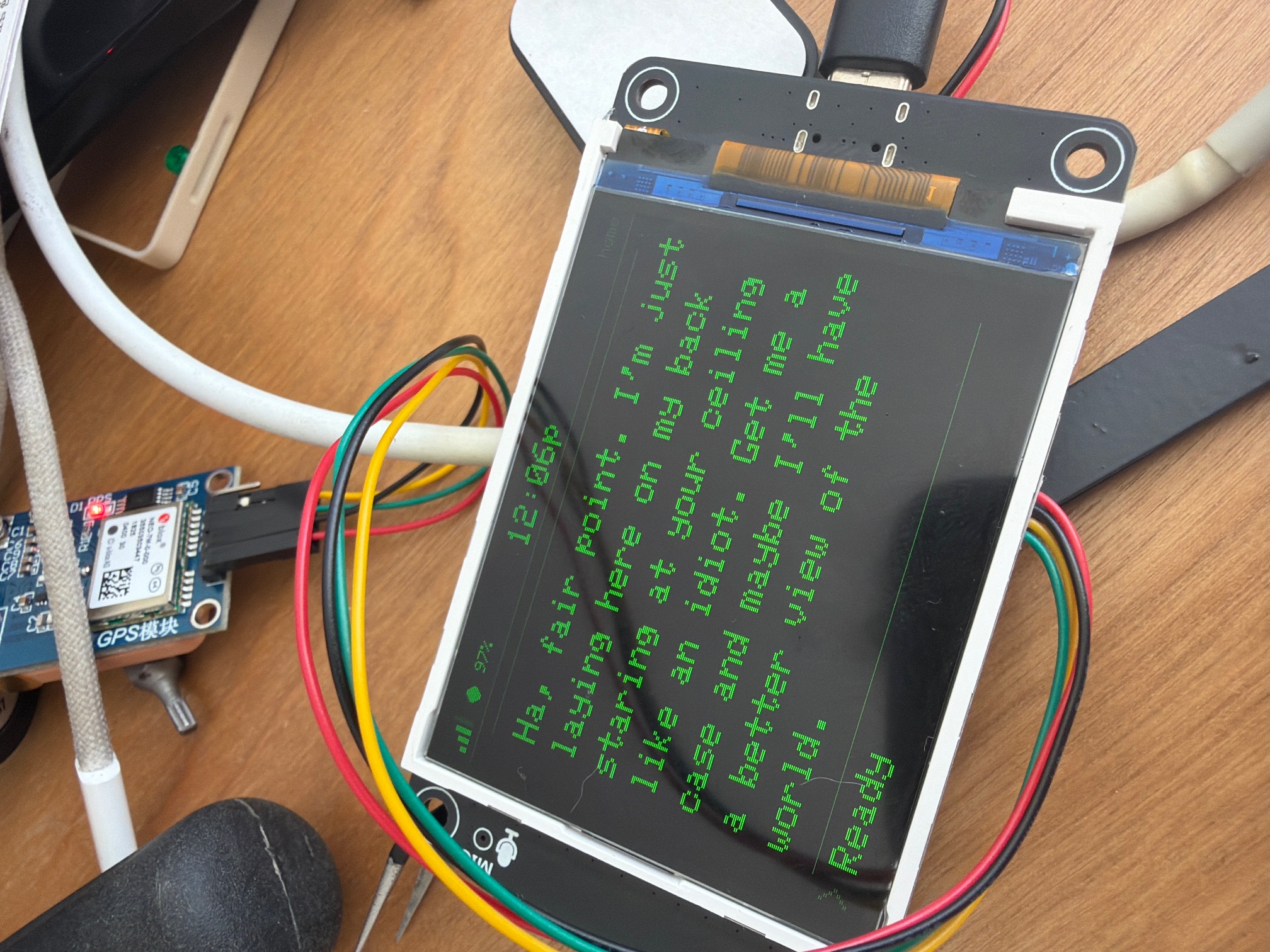

The Status Bar

The display shows everything happening at a glance:

- WiFi signal strength

- Server connection dot (green = connected)

- GPS satellite count (G3 = 3 satellites locked)

- Camera status (green CAM or red NO CAM)

- Battery percentage

- Current time

- Network name (home or mobile)

Each element redraws only when its value changes.
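Redrawing only on change amounts to a per-slot cache of what is already on screen. A minimal Python sketch of the idea (the real firmware is C++ and not public); the slot names, the draw callback and the text formats are assumptions modeled on the list above.

```python
from typing import Callable, Dict


class StatusBar:
    """Cache the last text drawn in each slot; repaint a slot only when it changes."""

    def __init__(self, draw: Callable[[str, str], None]) -> None:
        self._draw = draw               # draw(slot, text): paints one region of the TFT
        self._last: Dict[str, str] = {}

    def update(self, slot: str, text: str) -> bool:
        if self._last.get(slot) == text:
            return False                # unchanged: skip the display write entirely
        self._last[slot] = text
        self._draw(slot, text)
        return True


def status_texts(satellites: int, camera_ok: bool, battery_pct: int, network: str) -> Dict[str, str]:
    """Hypothetical slot names and formats for a few of the status-bar elements."""
    return {
        "gps": f"G{satellites}",
        "cam": "CAM" if camera_ok else "NO CAM",
        "battery": f"{battery_pct}%",
        "network": network,
    }
```

Feeding status_texts() into update() every loop iteration is cheap: if only the battery drops from 87% to 86%, only the battery slot gets repainted.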

The Camera

Triple-tap the button and Raspy enters camera mode. A live MJPEG stream from a separate ESP32-CAM shows up on the display. Tap to take a snapshot. Hold to exit back to voice mode.
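One button doing push-to-talk, triple-tap, tap and hold fits a small state machine that turns press/release timestamps into actions. The sketch below is a hypothetical Python model, not Raspy's C++ firmware: HOLD_MS, TAP_GAP_MS and the action names are made up for illustration.

```python
from typing import List, Optional

HOLD_MS = 500     # hypothetical: a press at least this long counts as a hold
TAP_GAP_MS = 350  # hypothetical: taps closer together than this form one gesture


class ButtonStateMachine:
    """Turn raw press/release timestamps into Raspy-style actions (sketch)."""

    def __init__(self) -> None:
        self.mode = "voice"             # "voice" or "camera"
        self.actions: List[str] = []    # actions for the main loop to carry out
        self._pressed_at: Optional[int] = None
        self._hold_fired = False
        self._recording = False
        self._taps = 0
        self._last_release = 0

    def press(self, t_ms: int) -> None:
        self.tick(t_ms)                 # flush a tap sequence that has gone stale
        self._pressed_at = t_ms
        self._hold_fired = False

    def release(self, t_ms: int) -> None:
        self.tick(t_ms)                 # catch a hold that crossed the threshold
        if self._pressed_at is None:
            return
        self._pressed_at = None
        if self._hold_fired:
            if self._recording:         # end of push-to-talk
                self._recording = False
                self.actions.append("stop_recording_and_send")
            return
        self._taps += 1
        self._last_release = t_ms

    def tick(self, t_ms: int) -> None:
        """Call from the main loop: fires holds and completed tap sequences."""
        if (self._pressed_at is not None and not self._hold_fired
                and t_ms - self._pressed_at >= HOLD_MS):
            self._hold_fired = True
            self._taps = 0
            self._on_hold()
        if (self._pressed_at is None and self._taps
                and t_ms - self._last_release > TAP_GAP_MS):
            taps, self._taps = self._taps, 0
            self._on_taps(taps)

    def _on_hold(self) -> None:
        if self.mode == "voice":
            self._recording = True
            self.actions.append("start_recording")
        else:                           # hold in camera mode exits to voice mode
            self.mode = "voice"
            self.actions.append("exit_camera")

    def _on_taps(self, taps: int) -> None:
        if self.mode == "voice" and taps == 3:
            self.mode = "camera"
            self.actions.append("enter_camera")
        elif self.mode == "camera" and taps == 1:
            self.actions.append("snapshot")
```

On a device, press() and release() would be driven by the button's GPIO edges and tick() by the main loop, which then drains actions. One trade-off of this design is that recording only starts once the press has lasted HOLD_MS.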

Snapshots are saved to my homelab with the GPS coordinates in the filename:

~/raspy-snapshots/2026-01-22/143256_40.6782_-73.9442.jpg

Later I can ask Claude "what did I take a picture of earlier?" and it knows where to look.
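Encoding date, time and coordinates in the path means finding a snapshot again is a directory listing plus a split on underscores. A minimal Python sketch, assuming the layout in the example path (a YYYY-MM-DD folder, HHMMSS_lat_lon.jpg, four decimal places); the function and type names are hypothetical.

```python
# Hypothetical helpers; the layout follows the example snapshot path above.
from datetime import datetime
from pathlib import Path
from typing import List, NamedTuple, Optional


class Snapshot(NamedTuple):
    taken_at: datetime
    lat: float
    lon: float
    path: Path


def snapshot_path(root: Path, taken_at: datetime, lat: float, lon: float) -> Path:
    """root/YYYY-MM-DD/HHMMSS_lat_lon.jpg, coordinates to 4 decimal places."""
    return root / f"{taken_at:%Y-%m-%d}" / f"{taken_at:%H%M%S}_{lat:.4f}_{lon:.4f}.jpg"


def parse_snapshot(path: Path) -> Optional[Snapshot]:
    """Recover time and place from a snapshot path; None if it doesn't match."""
    try:
        hhmmss, lat, lon = path.stem.split("_")
        taken_at = datetime.strptime(f"{path.parent.name} {hhmmss}", "%Y-%m-%d %H%M%S")
        return Snapshot(taken_at, float(lat), float(lon), path)
    except ValueError:
        return None


def snapshots_on(root: Path, day: str) -> List[Snapshot]:
    """All parseable snapshots for one day ("YYYY-MM-DD"), oldest first."""
    parsed = (parse_snapshot(p) for p in sorted((root / day).glob("*.jpg")))
    return [s for s in parsed if s is not None]
```

With root = Path.home() / "raspy-snapshots", a question like "what did I take a picture of earlier?" could start from snapshots_on(root, today) and look at the most recent entries.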

The Setup

Two devices working together:

- Raspy (ESP32-S3): Voice I/O, display, GPS, main interface

- ESP32-CAM: Cheap camera module that serves JPEG over HTTP

Both connect to the same network. Claude has a capture tool it can use when it wants to look - if I ask "what's in front of me?" it grabs a frame from the ESP32-CAM. The GPS location is passed in with each request so Claude knows where I am.
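Passing location with each request can be as simple as prefixing the transcript, and a capture tool as simple as fetching one JPEG over HTTP. A hedged Python sketch: the prompt wording, the esp32-cam.local hostname and the /capture path are assumptions (/capture is the still-image path in the stock Arduino CameraWebServer example, but Raspy's camera firmware may differ), and how the tool is exposed to Claude isn't covered here.

```python
import urllib.request
from typing import Optional

CAMERA_URL = "http://esp32-cam.local/capture"  # hypothetical host and path
JPEG_MAGIC = b"\xff\xd8"                       # every JPEG starts with the SOI marker


def build_prompt(transcript: str, lat: Optional[float], lon: Optional[float]) -> str:
    """Prefix the spoken request with the current GPS fix, if there is one."""
    if lat is None or lon is None:
        location = "Current location: unknown (no GPS fix)."
    else:
        location = f"Current location: {lat:.4f}, {lon:.4f} (from GPS)."
    return f"{location}\nUser said: {transcript}"


def is_jpeg(data: bytes) -> bool:
    return data.startswith(JPEG_MAGIC)


def capture_frame(url: str = CAMERA_URL, timeout_s: float = 5.0) -> bytes:
    """Fetch one still frame from the camera module over HTTP."""
    with urllib.request.urlopen(url, timeout=timeout_s) as response:
        data = response.read()
    if not is_jpeg(data):
        raise ValueError(f"{url} did not return a JPEG")
    return data
```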

Home and Mobile Mode

This thing needed to work both at home and on the go. At home it connects to my regular WiFi and talks to the homelab directly. When I'm mobile, my iPhone plugs into the Raspberry Pi (raspdeck) for its hotspot connection, and the Pi broadcasts a WiFi network for Raspy and the ESP32-CAM to connect to.

The switching is automatic. Plug in the iPhone and raspdeck becomes an access point (AP). Unplug it and everything falls back to the home network. Both devices have both networks configured and reconnect automatically.
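With both networks configured on each device, the fallback boils down to "join the strongest known network that is visible", roughly what the Arduino WiFiMulti helper does on ESP32. A minimal Python model of that choice; the SSIDs and the strongest-signal rule are assumptions, and the raspdeck side (becoming an AP when the iPhone is plugged in) isn't shown.

```python
from typing import Callable, Iterable, Optional, Tuple

KNOWN_SSIDS = {"home-wifi", "raspdeck"}  # hypothetical network names


def pick_network(scan: Iterable[Tuple[str, int]], known=KNOWN_SSIDS) -> Optional[str]:
    """Return the known SSID with the strongest RSSI (dBm), or None."""
    best_ssid, best_rssi = None, None
    for ssid, rssi in scan:
        if ssid in known and (best_rssi is None or rssi > best_rssi):
            best_ssid, best_rssi = ssid, rssi
    return best_ssid


def ensure_connected(connected: bool, scan: Iterable[Tuple[str, int]],
                     connect: Callable[[str], None], known=KNOWN_SSIDS) -> Optional[str]:
    """Call periodically: if the link dropped, rejoin whichever known network is up."""
    if connected:
        return None
    ssid = pick_network(scan, known)
    if ssid is not None:
        connect(ssid)
    return ssid
```

When the iPhone is unplugged, raspdeck disappears from the scan and the next reconnect lands on home WiFi. A fixed priority order would work just as well if you'd rather always prefer one network when both are up.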

Everything's on Tailscale. The voice server runs on ubuntu-homelab, where Claude runs as a tight instance of claude -p, the Claude Code CLI's non-interactive print mode.
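Calling the CLI from the voice server can be a plain subprocess call. A minimal sketch, assuming the claude binary is on the server's PATH; -p (--print) runs one prompt non-interactively and prints the response. Whatever flags make Raspy's instance "tight" aren't described in the post, so none are added here.

```python
# Assumes the `claude` CLI (Claude Code) is installed and on PATH.
import subprocess
from typing import List


def claude_command(prompt: str) -> List[str]:
    """argv for a one-shot, non-interactive Claude Code call."""
    return ["claude", "-p", prompt]


def ask_claude(prompt: str, timeout_s: float = 120.0) -> str:
    """Run `claude -p` and return its printed reply; raises on non-zero exit or timeout."""
    result = subprocess.run(
        claude_command(prompt),
        capture_output=True,
        text=True,
        timeout=timeout_s,
        check=True,
    )
    return result.stdout.strip()
```

A function like ask_claude is the kind of thing that would plug into the "brain" stage of the pipeline. Passing the prompt as a list element rather than through a shell avoids quoting problems with whatever Whisper transcribed.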

The Hardware

The parts:

- Freenove FNK0104 (non-touch) - ESP32-S3 with display and audio codec

- Neo-7M GPS - for location context

- ESP32-CAM - separate camera module

- Small speaker - for TTS output
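The Neo-7M streams NMEA sentences over UART, and the GGA sentence carries both the position fix and the number of satellites in use, which is what a "G3" label needs. A minimal Python sketch of parsing it (Raspy's real parser lives in the C++ firmware and isn't public; the helper names here are hypothetical). It verifies the checksum, converts ddmm.mmmm to decimal degrees and ignores every other sentence type.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GgaFix:
    lat: Optional[float]  # decimal degrees, negative = south
    lon: Optional[float]  # decimal degrees, negative = west
    fix_quality: int      # 0 = no fix
    satellites: int       # satellites used in the fix


def nmea_checksum(body: str) -> int:
    """XOR of every character between '$' and '*'."""
    checksum = 0
    for ch in body:
        checksum ^= ord(ch)
    return checksum


def _to_degrees(value: str, hemisphere: str) -> Optional[float]:
    """Convert NMEA (d)ddmm.mmmm plus N/S/E/W into signed decimal degrees."""
    if not value:
        return None
    dot = value.find(".")
    if dot < 0:
        dot = len(value)
    degrees = int(value[: dot - 2])
    minutes = float(value[dot - 2 :])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> Optional[GgaFix]:
    """Parse a $--GGA sentence; return None for other sentences or bad checksums."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, checksum = sentence[1:].partition("*")
    try:
        if int(checksum[:2], 16) != nmea_checksum(body):
            return None
    except ValueError:
        return None
    fields = body.split(",")
    if len(fields) < 8 or not fields[0].endswith("GGA"):
        return None
    try:
        return GgaFix(
            lat=_to_degrees(fields[2], fields[3]),
            lon=_to_degrees(fields[4], fields[5]),
            fix_quality=int(fields[6] or 0),
            satellites=int(fields[7] or 0),
        )
    except ValueError:
        return None
```

For the status bar, f"G{fix.satellites}" then produces the satellite label, and the lat/lon pair is what ends up in prompts and snapshot filenames.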

The Freenove needs 8MB of PSRAM - without it the audio buffers don't fit. The case is designed in OpenSCAD and printed on a Bambu A1 Mini.
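A back-of-envelope calculation shows why PSRAM matters. The figures below are assumptions, not Raspy's actual settings: 16 kHz, 16-bit mono for the microphone (a common rate for Whisper), 24 kHz, 16-bit mono for playback (Kokoro's usual output rate), and 10 seconds of each. The ESP32-S3's 512 KB of on-chip SRAM is also shared with WiFi, the display driver and task stacks.

```python
# Back-of-envelope audio buffer sizing. Every rate and duration here is an
# assumption for illustration, not a value taken from Raspy's firmware.
INTERNAL_SRAM_BYTES = 512 * 1024  # ESP32-S3 on-chip SRAM (shared with everything else)


def pcm_bytes(seconds: float, sample_rate_hz: int,
              bytes_per_sample: int = 2, channels: int = 1) -> int:
    """Size of an uncompressed PCM buffer in bytes."""
    return int(seconds * sample_rate_hz * bytes_per_sample * channels)


mic_buffer = pcm_bytes(10, 16_000)    # 10 s of 16 kHz, 16-bit mono speech
reply_buffer = pcm_bytes(10, 24_000)  # 10 s of 24 kHz, 16-bit mono TTS audio
total = mic_buffer + reply_buffer

print(f"mic {mic_buffer:,} B + reply {reply_buffer:,} B = {total:,} B")
print(f"internal SRAM {INTERNAL_SRAM_BYTES:,} B, fits: {total < INTERNAL_SRAM_BYTES}")
```

Under those assumptions the two buffers alone need 800,000 bytes, more than the 524,288 bytes of internal SRAM before anything else has allocated memory.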

The green sparkle case is v1 - functional but chunky. Will reprint eventually.

Tinkering with adding a custom wake word ("Hey Raspy") but running into challenges. Will eventually get it working.

Building This

Most of this was built with Claude Code. Not in an "I asked AI to write code" way - more like pair programming where I described what I wanted and we worked through the problems together. The button state machine, the network switching logic, the camera streaming - all of that came out of back-and-forth sessions.

The ESP32 firmware is about 1500 lines of C++. The server is maybe 600 lines of Python. Not a huge codebase, but a lot of moving parts: WebSockets, HTTP, I2S audio, TFT display, GPS parsing, WiFi management.

The source isn't public yet, but I might open source it eventually.